How does an RBL, or Real-time Blackhole List, work?

What is an RBL, and How Does it Function? Real-time Blackhole List (RBL) is a common term in email and internet security. An RBL is a DNS-based list of IP addresses known to be spam sources. When an email administrator queries an RBL, the lookup checks whether the sender’s IP address matches any […]
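To make the DNS-based lookup concrete, here is a minimal sketch of how a query name is commonly built for an IPv4 address: the octets are reversed and the list's zone is appended. The function name `rbl_query_name` and the zone `rbl.example.org` are placeholders chosen for illustration, not a real list or an API from the post.

```python
import ipaddress


def rbl_query_name(ip: str, zone: str) -> str:
    """Build the DNS name that a DNS-based blocklist lookup would query.

    `zone` is a hypothetical blocklist zone; substitute the zone of the
    list you actually use.
    """
    # Validate the input; raises ValueError for anything that is not IPv4.
    addr = ipaddress.IPv4Address(ip)
    # Reverse the octets: 192.0.2.1 -> 1.2.0.192
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"


print(rbl_query_name("192.0.2.1", "rbl.example.org"))
# prints 1.2.0.192.rbl.example.org
```

Resolving that name with an ordinary DNS A-record lookup is the actual check: on lists that follow the usual convention, an answer means the address is listed and a "no such name" response means it is not.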

Best Email Hosting Service that Syncs Your Calendar and Contacts

Email Hosting Services Overview Email hosting services are crucial for businesses looking to establish a professional online presence through a custom email address that matches their domain name. These services not only provide email accounts but also come with a range of features to enhance communication and productivity. What are the key features of a […]

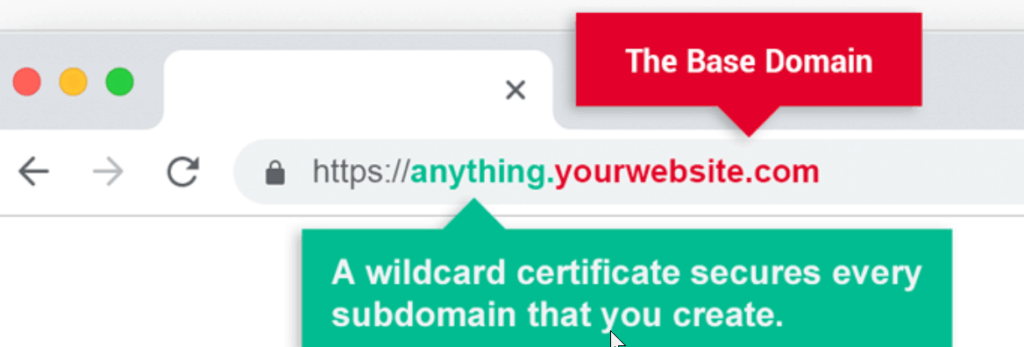

Does your Website Need an SSL Certificate?

In today’s digital age, website security is of utmost importance. With cyber threats on the rise, ensuring that your website is safe and secure for visitors is crucial. One of the key components of website security is the implementation of an SSL certificate. In this article, we’ll explore the significance of SSL certificates and why […]

Free SSL vs Paid SSL Certificate: Which One to Choose?

When it comes to securing your website and protecting the sensitive information of your visitors, SSL certificates play a crucial role. However, deciding whether to opt for a free SSL certificate or invest in a paid one can be daunting. In this article, we will delve into the differences between free and paid […]

Why You Should Use an Email Verifier

Email marketers understand how critical it is to verify their email lists. Doing so keeps bounce rates low and the sender’s reputation intact. There are several ways to do this. One method involves using tools like PuTTY or Telnet to ping the mail server directly; however, this approach is cumbersome and could potentially harm your infrastructure. Real-time […]

Is ZSTD a Better Backup Compression Method than GZIP?

Backup File Compression Backup file compression is the process of reducing the size of backup files, making them easier to store and transfer. It can be done using a variety of algorithms, such as gzip or Zstandard (ZSTD). Compression algorithms are used […]

Tips to Speed Up Your WordPress Website

Are you looking for ways to speed up your WordPress website? If so, then you’ve come to the right place! Getting a hosting space with fast storage like NVMe SSD might not speed up your WordPress website. You have probably tried that already, which is why you are searching for a solution. In this blog post, we’ll […]

8 Common VPS Use Cases

Are you curious about what a VPS can do for you? Are you looking for reliable hosting options to power your business or website? If so, then this blog post is for you! We’ll look at five common uses of VPS in this post, and help you find the best option for your needs. What […]

What is VPS Hosting?

Are you curious about VPS hosting? This blog post is for you! We’ll explain VPS hosting, how it works, and why it might be the right choice for your website. So grab a cup of coffee, and let’s get started! What is VPS Hosting? VPS Hosting, or Virtual Private Server Hosting, is a web hosting […]

Why are keywords important for SEO?

Why are keywords important for SEO? Are you a content writer who wants to learn how to optimize your work for search engines? Do you want to drive more traffic and attention to your blog or website? If so, then understanding the importance of keywords is essential. This blog post will explore why keywords are […]